One of the major trends in scientific research is the increase in data volume. Science is becoming increasingly data-driven, with data sets at the terabyte scale. Once only particle physicists produced such huge amounts of data, but now many other branches of science do too: advances in recording brain activity pose new challenges for brain researchers, data from large Internet of Things sensor networks needs to be processed, and computational linguists want to analyse data sets like Common Crawl, the huge public data set providing a copy of the Internet.

These researchers are not necessarily computer scientists or highly skilled programmers, so they need help to crunch their data. Moreover, they want their data analysed as fast as possible, so it has to be analysed where it is stored, to avoid long waits due to data transfer between storage and processing facilities. For instance, moving 100 TB of data over a 10 Gbit/s link takes roughly 22 hours, even at full bandwidth.
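The transfer-time estimate can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name `transfer_time_hours` is made up for this example, it assumes decimal units (1 TB = 10^12 bytes, 1 Gbit/s = 10^9 bits per second), and it ignores protocol overhead. Under those assumptions it gives a figure of about 22 hours, in line with the one quoted above; the exact decimals depend on whether a terabyte is counted in powers of 10 or powers of 2.

```python
def transfer_time_hours(data_bytes: float, link_bits_per_second: float) -> float:
    """Idealised transfer time in hours: link fully used, no protocol overhead."""
    seconds = data_bytes * 8 / link_bits_per_second  # 8 bits per byte
    return seconds / 3600


TB = 10**12    # decimal terabyte in bytes (assumption)
GBIT = 10**9   # decimal gigabit in bits (assumption)

hours = transfer_time_hours(100 * TB, 10 * GBIT)
print(f"{hours:.1f} hours")  # prints "22.2 hours"
```

In practice the link is rarely available at full speed for the whole transfer, so real transfers take longer, which is the motivation for analysing the data where it is stored.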

Making collaboration easier

Developers from the Norwegian R&E network Uninett are now preparing a new offering, “Data Analytics as a Service” (DaaS), to supplement their existing portfolio of storage, compute and cloud services for dedicated research e-infrastructures.

This kind of service is new to the R&E network community, and Uninett head of development Olav Kvittem and programmer Gurvinder Singh hope it will help research progress at a faster pace and make collaboration between researchers easier. According to Kvittem and Singh, the field of data analytics has developed a growing set of methods and tools to analyse big data. But to spread them to a wider group of users, they have to be made simpler to use.

Preparing the data is a big part of any analysis and a significant challenge in itself, they say.

“Our goal is to provide a service that enables processing of large data sets in parallel using the data locality principle. Among other things this gives researchers the possibility to share research data as well as the processing pipeline with other research communities. So it is a step towards reproducible research.”

Moving out of the lab

Later this year Data Analytics as a Service will move out of the lab and into production, making it accessible to Norwegian students and researchers and to the Nordic research community as well.

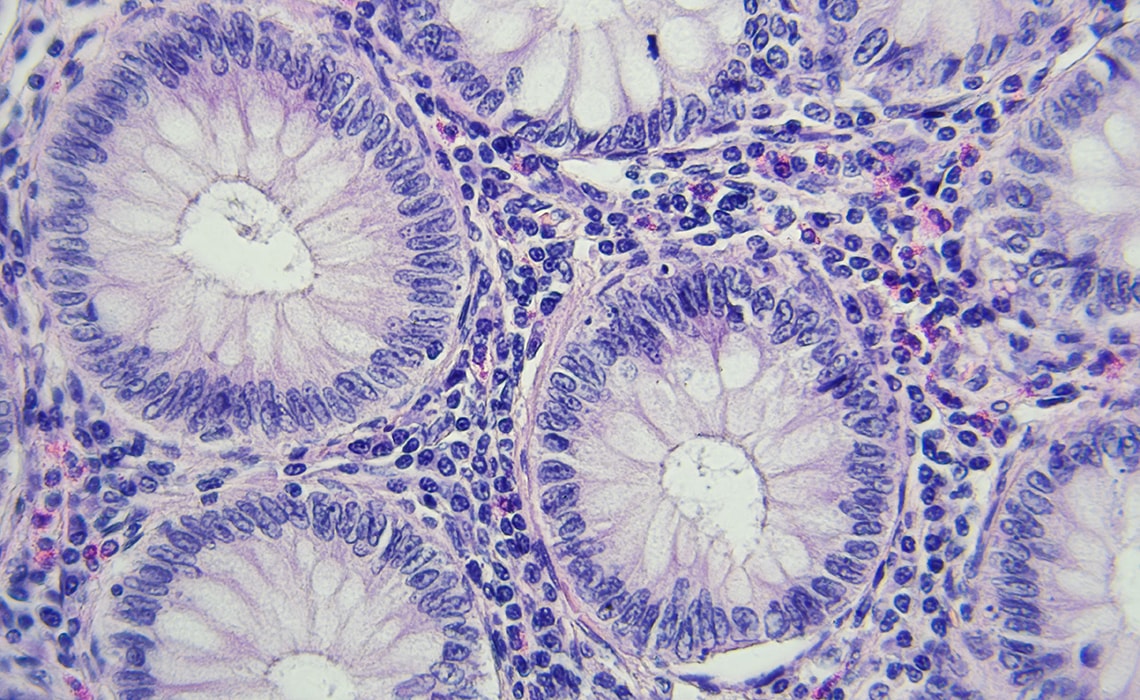

Olav Kvittem and Gurvinder Singh have already helped researchers at the Proteomics and Metabolomics Core Facility (PROMEC) in Trondheim, Norway, analyse protein data sets to recognize proteins in cancer samples. Eight other Norwegian research institutes have expressed interest in using the service once it is made available to a wider audience.

Olav Kvittem and Gurvinder Singh hope that in the near future their Data Analytics as a Service offering will be used widely in fields like proteomics, computational linguistics and environmental sciences, not least because DaaS enables researchers not only to analyse very large data sets and publish the results, but also to share their data sets together with the whole processing pipeline.

“Imagine you are a researcher reading a scientific paper and thinking about some modifications that you would like to test against the published work. It would be great if the author provided you with a link to a portal where you could rerun the whole analysis with your modifications on a subset or the full data set.

“This is what DaaS is offering, and as a consequence, results will be reproducible, collaboration between researchers will become much easier, and ultimately research will progress at a faster pace.”